
Mental Health Experts Call for Evaluation of AI Tools in Care

Mental health professionals are now urging their colleagues to conduct thorough evaluations of artificial intelligence (AI)-based tools, particularly large language models (LLMs) used in mental health care. This call to action comes as millions of individuals engage with these AI systems to discuss their mental health concerns. Some mental health care providers have already integrated LLM-based tools into their daily workflows, raising questions about the effectiveness and safety of these technologies.

The growing presence of LLMs in mental health care has prompted a critical examination of their capabilities and limitations. According to a report from the American Psychological Association (APA), while these tools can facilitate access to mental health resources, they may lack the nuanced understanding and empathy that human practitioners provide. The report emphasizes the necessity for health providers to assess how these tools align with established clinical practices.

Integration of AI in Mental Health Care

The integration of AI technologies into mental health services has accelerated in recent years. Providers in the United States, United Kingdom, Australia, and Canada are increasingly adopting LLMs to enhance patient interaction and streamline care processes. For instance, some facilities have implemented LLM-powered chatbots that respond immediately to patient inquiries, with the aim of easing the burden on mental health professionals.

Despite these advancements, the APA and other health organizations caution that relying solely on AI for mental health support can be problematic. The effectiveness of LLMs in identifying complex mental health issues and providing appropriate responses is still under scrutiny. Experts highlight that while these models can generate informative content, they do not possess the emotional intelligence required to navigate sensitive conversations.

Concerns Over AI’s Role in Mental Health

Mental health professionals express concerns regarding the potential for AI tools to misinterpret patient needs or provide inadequate responses. The World Health Organization (WHO) has highlighted the importance of human intervention in mental health treatment, stating that “AI should complement, not replace, the human touch in mental health care.”

Moreover, there are ethical implications tied to the use of AI in mental health. Issues surrounding data privacy, consent, and the potential for bias in AI algorithms have raised alarms among practitioners. As LLMs learn from vast datasets, the risk of perpetuating stereotypes or providing misleading information remains a significant concern.

To address these issues, mental health professionals are encouraged to engage in ongoing evaluations of AI tools. This includes assessing their effectiveness, understanding patient feedback, and ensuring that AI systems are regularly updated to reflect current best practices in mental health care.

As the landscape of mental health support evolves, the dialogue surrounding the role of AI becomes increasingly important. While LLMs offer innovative solutions to expand access to care, professionals stress that the human element must remain central to mental health treatment. Balancing the use of AI with effective human oversight will be crucial in ensuring the well-being of patients seeking help.

As AI technologies continue to permeate the mental health sphere, the call for rigorous evaluation by professionals grows more pressing. The future of mental health care may well depend on finding the right balance between advanced tools and compassionate human care.

-

Science2 months ago

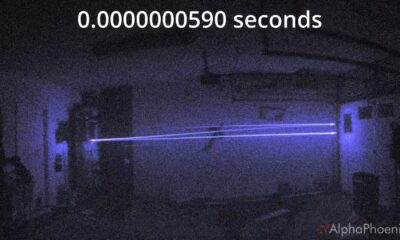

Science2 months agoInventor Achieves Breakthrough with 2 Billion FPS Laser Video

-

Health2 months ago

Health2 months agoCommunity Unites for 7th Annual Into the Light Walk for Mental Health

-

Top Stories2 months ago

Top Stories2 months agoCharlie Sheen’s New Romance: ‘Glowing’ with Younger Partner

-

Entertainment2 months ago

Entertainment2 months agoDua Lipa Aces GCSE Spanish, Sparks Super Bowl Buzz with Fans

-

Health2 months ago

Health2 months agoCurium Group, PeptiDream, and PDRadiopharma Launch Key Cancer Trial

-

Top Stories2 months ago

Top Stories2 months agoFormer Mozilla CMO Launches AI-Driven Cannabis Cocktail Brand Fast

-

Entertainment2 months ago

Entertainment2 months agoMother Fights to Reunite with Children After Kidnapping in New Drama

-

World2 months ago

World2 months agoIsrael Reopens Rafah Crossing After Hostage Remains Returned

-

Business2 months ago

Business2 months agoTyler Technologies Set to Reveal Q3 Earnings on October 22

-

World2 months ago

World2 months agoR&B Icon D’Angelo Dies at 51, Leaving Lasting Legacy

-

Health2 months ago

Health2 months agoNorth Carolina’s Biotech Boom: Billions in New Investments

-

Entertainment2 months ago

Entertainment2 months agoRed Sox’s Bregman to Become Free Agent; Tigers Commit to Skubal