Zico Kolter Leads OpenAI Safety Panel to Oversee AI Releases

Zico Kolter, a professor at Carnegie Mellon University, has taken on a vital role in overseeing artificial intelligence safety as the chair of OpenAI’s Safety and Security Committee. This four-member panel possesses the authority to halt the release of new AI systems deemed unsafe, addressing concerns that range from potential misuse for creating weapons to damaging impacts on mental health. Kolter’s leadership becomes especially significant following agreements made with regulators in California and Delaware, which emphasize safety considerations as a priority over financial interests.

OpenAI, founded as a nonprofit research lab with the aim of developing beneficial AI, has faced scrutiny for its rapid product launches, notably after the release of ChatGPT. Critics argue that the company has rushed its technology to market, sometimes compromising safety. Following a tumultuous period that saw the temporary ousting of CEO Sam Altman in 2023, these concerns have gained more attention.

Regulatory Agreements and Oversight Authority

The recent agreements between OpenAI and the attorneys general of California and Delaware reinforce Kolter’s oversight role. These commitments stipulate that safety and security considerations must be prioritized as OpenAI transitions into a public benefit corporation under the control of its nonprofit foundation. Kolter will serve on the nonprofit’s board but will not hold a position on the for-profit board. Nevertheless, he has been granted “full observation rights” to attend all for-profit board meetings and will have access to crucial information regarding AI safety decisions.

Kolter stated that the agreements largely confirm the existing authority of his safety committee, which was formed in 2024. The committee includes notable members such as former U.S. Army General Paul Nakasone, who previously led U.S. Cyber Command. Kolter noted that the panel can delay model releases until safety mitigations are met, although he declined to say whether any releases have been halted over safety concerns.

Addressing Current and Emerging Risks

In an interview with The Associated Press, Kolter highlighted a range of potential risks associated with AI systems. These include cybersecurity threats, such as the possibility of AI agents inadvertently leaking sensitive data, as well as decisions about whether to release model weights, the numerical values that influence how an AI system performs. He emphasized that new AI technologies present unique challenges, asking, “Do models enable malicious users to have much higher capabilities when it comes to things like designing bioweapons or performing malicious cyberattacks?”

Kolter also expressed concern about the impact of AI on individuals, particularly mental health issues arising from interactions with AI systems. This year, OpenAI has already faced backlash over its flagship chatbot, including a wrongful-death lawsuit filed by California parents whose son reportedly took his own life after extensive interactions with ChatGPT.

Kolter began studying machine learning as a student at Georgetown University in the early 2000s and has followed the field's evolution closely ever since. He attended OpenAI's launch in 2015, yet the pace of progress since then has exceeded many expectations. “Very few people, even those deeply involved in machine learning, anticipated the current state we are in,” he remarked.

AI safety advocates are closely watching Kolter’s leadership and the restructuring within OpenAI. Nathan Calvin, general counsel at the AI policy nonprofit Encode, expressed measured optimism about Kolter’s appointment. “I think he has the sort of background that makes sense for this role,” Calvin said, underscoring the importance of ensuring that OpenAI adheres to its founding mission.

As artificial intelligence continues to evolve, Kolter’s role at OpenAI is likely to grow in importance, and the ongoing debate over AI safety will shape both the technology's trajectory and its impact on society.

-

Science2 weeks ago

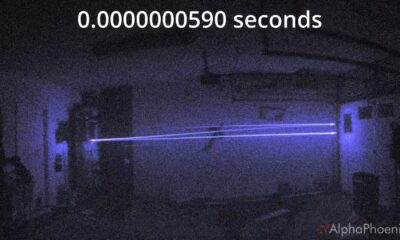

Science2 weeks agoInventor Achieves Breakthrough with 2 Billion FPS Laser Video

-

Top Stories3 weeks ago

Top Stories3 weeks agoCharlie Sheen’s New Romance: ‘Glowing’ with Younger Partner

-

Business3 weeks ago

Business3 weeks agoTyler Technologies Set to Reveal Q3 Earnings on October 22

-

Entertainment3 weeks ago

Entertainment3 weeks agoDua Lipa Aces GCSE Spanish, Sparks Super Bowl Buzz with Fans

-

Health3 weeks ago

Health3 weeks agoCommunity Unites for 7th Annual Into the Light Walk for Mental Health

-

Health3 weeks ago

Health3 weeks agoCurium Group, PeptiDream, and PDRadiopharma Launch Key Cancer Trial

-

World3 weeks ago

World3 weeks agoR&B Icon D’Angelo Dies at 51, Leaving Lasting Legacy

-

Entertainment3 weeks ago

Entertainment3 weeks agoRed Sox’s Bregman to Become Free Agent; Tigers Commit to Skubal

-

Entertainment3 weeks ago

Entertainment3 weeks agoMother Fights to Reunite with Children After Kidnapping in New Drama

-

Health3 weeks ago

Health3 weeks agoNorth Carolina’s Biotech Boom: Billions in New Investments

-

Science3 weeks ago

Science3 weeks agoNorth Carolina’s Biotech Boom: Billions Invested in Manufacturing

-

Top Stories3 weeks ago

Top Stories3 weeks agoDisney+ Launches Chilling Classic ‘Something Wicked’ Just in Time for October