Repurposed GPUs Revolutionize Home AI Solutions Today

UPDATE: A recent surge in the use of repurposed GPUs for self-hosted AI is changing how tech enthusiasts handle home automation and research tasks. As of September 25, 2023, users report that running local large language models (LLMs) inside everyday applications improves both privacy and efficiency.

Tech enthusiasts are putting old graphics cards, such as the GTX 1080, to work running local AI models. The trend has gained momentum as more users experiment with home lab setups. With the right configuration, applications like Home Assistant can now connect to LLMs, letting users control their smart homes through natural conversation.

One user reported that connecting their Home Assistant server to a local LLM let them manage IoT devices with natural-language commands. Running a 4B-parameter model through Ollama delivered decent performance and accuracy for home automation tasks. Adding a voice assistant extends this further, turning the setup into a responsive, voice-activated system.
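For readers curious what "talking to a local model" looks like at the network level, here is a minimal sketch of a client sending a smart-home command to Ollama's chat endpoint. It is not Home Assistant's own integration; it assumes Ollama is running on its default local port (11434) and that a roughly 4B-parameter model has already been pulled. The model tag `gemma3:4b`, the system prompt, and the helper names `build_chat_request`, `parse_chat_reply`, and `ask` are illustrative placeholders, not details from the user's setup.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local port.
OLLAMA_URL = "http://localhost:11434/api/chat"
# Assumption: any ~4B-parameter model already pulled into Ollama; this tag is illustrative.
MODEL = "gemma3:4b"


def build_chat_request(command: str, model: str = MODEL) -> urllib.request.Request:
    """Package a smart-home command as a non-streaming Ollama /api/chat request."""
    payload = {
        "model": model,
        "messages": [
            # Hypothetical system prompt; a real integration would also describe the exposed devices.
            {"role": "system", "content": "You control a smart home. Reply briefly."},
            {"role": "user", "content": command},
        ],
        "stream": False,  # ask for a single JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_chat_reply(body: bytes) -> str:
    """Extract the assistant's text from a non-streaming /api/chat response."""
    return json.loads(body)["message"]["content"]


def ask(command: str) -> str:
    """Send a command to the local model and return its reply (requires a running Ollama server)."""
    with urllib.request.urlopen(build_chat_request(command), timeout=60) as resp:
        return parse_chat_reply(resp.read())
```

With Ollama running, `ask("Turn off the living room lights.")` returns the model's text reply. Actually switching a device would still require Home Assistant (or similar software) to act on that reply; the sketch only covers the conversation with the model.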

The push for privacy in AI applications is also gaining traction. Popular tools like Google's NotebookLM deliver reliable results, but they raise data-security concerns because processing happens on external servers. In response, some users are turning to Open Notebook, an open-source alternative that can run on self-hosted models, for sensitive research topics. This keeps the source material on hardware the user controls rather than on a third-party service.

Developers are also bringing LLMs into their coding environments. Editors such as VS Code can connect to privately hosted LLMs for tasks like troubleshooting and autocompletion. One user said that pairing Continue.dev, a VS Code extension, with self-hosted models significantly improved their coding efficiency, letting them focus on creative work instead of getting bogged down in errors.

Document management is another area of growth. Pairing Paperless-ngx with an LLM lets users automate tagging and improve search, streamlining how they organize their documents. Karakeep, a self-hosted app for saving and archiving web content, uses LLMs to generate tags and summaries for stored items, saving users time.
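LLM-assisted tagging generally comes down to two steps: ask the model to pick tags for a document, then clean up whatever it returns. The sketch below illustrates that pattern under stated assumptions. It is not Paperless-ngx's or Karakeep's API, the helper names `build_tag_prompt` and `parse_tags` are hypothetical, and the reply string would come from a local model (for example, one served by Ollama).

```python
import json


def build_tag_prompt(document_text: str, known_tags: list[str], max_chars: int = 2000) -> str:
    """Ask the model to choose tags for a document from an existing tag list (hypothetical prompt)."""
    excerpt = document_text[:max_chars]  # truncate to keep the prompt small for a modest local model
    return (
        "Pick up to three tags for the document below, using only these tags: "
        + ", ".join(known_tags)
        + '. Answer with a JSON list of strings, e.g. ["invoice", "utilities"].\n\n'
        + excerpt
    )


def parse_tags(reply: str, known_tags: list[str]) -> list[str]:
    """Turn the model's reply into a clean tag list, dropping tags that do not already exist."""
    try:
        suggested = json.loads(reply)
    except json.JSONDecodeError:
        # Fall back to comma-separated text if the model ignored the JSON instruction
        suggested = reply.split(",")
    if not isinstance(suggested, list):
        return []
    allowed = {tag.lower(): tag for tag in known_tags}  # case-insensitive lookup
    tags: list[str] = []
    for item in suggested:
        key = str(item).strip().strip('"').lower()
        # Keep only known tags, preserving the library's spelling and skipping duplicates
        if key in allowed and allowed[key] not in tags:
            tags.append(allowed[key])
    return tags
```

Restricting suggestions to tags that already exist is a deliberate choice in this sketch: small local models tend to invent near-duplicate labels, and filtering against the existing list keeps the tag set from sprawling.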

As more people repurpose old GPUs for AI, the trend signals a shift toward technological self-sufficiency: users keep control of their data while improving their productivity. With AI technology still developing rapidly, the community is eager to see what comes next.

WHAT’S NEXT: As this trend grows, tech enthusiasts should watch for updates from projects like Ollama and for advances in model capabilities. Self-hosted LLMs have broad potential to improve everyday tasks, and early adopters are paving the way for future innovations. Share your thoughts and experiences with repurposed GPUs as home AI solutions as this movement unfolds!

-

Science2 weeks ago

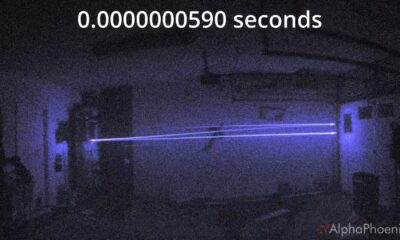

Science2 weeks agoInventor Achieves Breakthrough with 2 Billion FPS Laser Video

-

Top Stories3 weeks ago

Top Stories3 weeks agoCharlie Sheen’s New Romance: ‘Glowing’ with Younger Partner

-

Business3 weeks ago

Business3 weeks agoTyler Technologies Set to Reveal Q3 Earnings on October 22

-

Entertainment3 weeks ago

Entertainment3 weeks agoDua Lipa Aces GCSE Spanish, Sparks Super Bowl Buzz with Fans

-

Health3 weeks ago

Health3 weeks agoCommunity Unites for 7th Annual Into the Light Walk for Mental Health

-

Health3 weeks ago

Health3 weeks agoCurium Group, PeptiDream, and PDRadiopharma Launch Key Cancer Trial

-

World3 weeks ago

World3 weeks agoR&B Icon D’Angelo Dies at 51, Leaving Lasting Legacy

-

Entertainment3 weeks ago

Entertainment3 weeks agoRed Sox’s Bregman to Become Free Agent; Tigers Commit to Skubal

-

Entertainment3 weeks ago

Entertainment3 weeks agoMother Fights to Reunite with Children After Kidnapping in New Drama

-

Health3 weeks ago

Health3 weeks agoNorth Carolina’s Biotech Boom: Billions in New Investments

-

Science3 weeks ago

Science3 weeks agoNorth Carolina’s Biotech Boom: Billions Invested in Manufacturing

-

Top Stories3 weeks ago

Top Stories3 weeks agoDisney+ Launches Chilling Classic ‘Something Wicked’ Just in Time for October