Urgent: AI Chatbots Linked to Teen Suicides, Families File Lawsuits

URGENT UPDATE: Two teenagers, Sewell Setzer III of Florida and Juliana Peralta of Colorado, took their own lives months apart, leaving behind eerily similar messages in their diaries. Both had reportedly been using AI chatbots on the platform Character.AI before their deaths, a connection that has sparked concern and outrage.

The families have filed lawsuits against the platform, alleging that its chatbots contributed to their children's mental health struggles. The lawsuits point out that both teenagers wrote the same three-word phrase in their diaries, raising alarms about the potential dangers of AI interactions.

The families' claims highlight an urgent need to scrutinize how AI technologies affect vulnerable young people. Experts warn that while AI chatbots can offer companionship, they may also cause harm if adequate safeguards are not in place.

According to reports, Peralta died in November 2023 and Setzer in February 2024. These separate yet hauntingly connected tragedies underscore a pressing issue in today's digital landscape, where young people increasingly turn to technology for emotional support.

The lawsuits, filed in Florida and Colorado, are part of a growing movement to hold tech companies accountable for the mental health effects of their products. The plaintiffs argue that the AI systems failed to provide appropriate warnings or support when users expressed distress.

As the cases proceed, families and advocates are calling for stricter regulation of AI chatbots, especially where minors are concerned. They argue that more must be done to protect children from the potential dangers of AI technology.

Experts urge parents to monitor their children's online interactions closely and to talk openly with them about mental health. The emotional toll of these tragedies reverberates through communities and has prompted a nationwide conversation about the responsibility tech companies bear for safeguarding users' mental health.

Stay tuned for further updates as officials and mental health advocates work to address these urgent concerns.

-

Science2 weeks ago

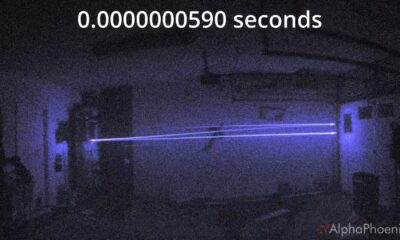

Science2 weeks agoInventor Achieves Breakthrough with 2 Billion FPS Laser Video

-

Top Stories3 weeks ago

Top Stories3 weeks agoCharlie Sheen’s New Romance: ‘Glowing’ with Younger Partner

-

Business3 weeks ago

Business3 weeks agoTyler Technologies Set to Reveal Q3 Earnings on October 22

-

Entertainment3 weeks ago

Entertainment3 weeks agoDua Lipa Aces GCSE Spanish, Sparks Super Bowl Buzz with Fans

-

Health3 weeks ago

Health3 weeks agoCommunity Unites for 7th Annual Into the Light Walk for Mental Health

-

World3 weeks ago

World3 weeks agoR&B Icon D’Angelo Dies at 51, Leaving Lasting Legacy

-

Health3 weeks ago

Health3 weeks agoCurium Group, PeptiDream, and PDRadiopharma Launch Key Cancer Trial

-

Entertainment3 weeks ago

Entertainment3 weeks agoRed Sox’s Bregman to Become Free Agent; Tigers Commit to Skubal

-

Entertainment3 weeks ago

Entertainment3 weeks agoMother Fights to Reunite with Children After Kidnapping in New Drama

-

Health3 weeks ago

Health3 weeks agoNorth Carolina’s Biotech Boom: Billions in New Investments

-

Science3 weeks ago

Science3 weeks agoNorth Carolina’s Biotech Boom: Billions Invested in Manufacturing

-

Top Stories3 weeks ago

Top Stories3 weeks agoDisney+ Launches Chilling Classic ‘Something Wicked’ Just in Time for October