ETRI Proposes New AI Standards for Safety and Consumer Trust

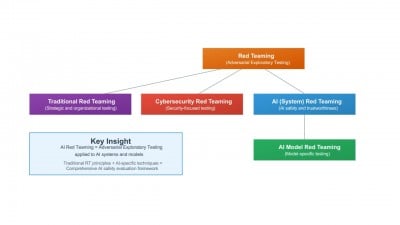

The Electronics and Telecommunications Research Institute (ETRI) has proposed two new standards aimed at enhancing AI safety and consumer trust. The standards, named “AI Red Team Testing” and “Trustworthiness Fact Label (TFL),” were submitted to ISO/IEC, the joint standardization framework of the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), for consideration and development.

The AI Red Team Testing standard focuses on proactively identifying potential risks in AI systems before they are deployed. This initiative aims to improve the reliability and safety of AI technologies by encouraging developers to anticipate and mitigate issues that could arise during real-world applications. The proactive approach intends to address concerns surrounding AI’s impact on society, ensuring that systems are not only effective but also safe for users.

In addition, the Trustworthiness Fact Label (TFL) standard seeks to provide consumers with a clear understanding of the authenticity and reliability of AI systems. By labeling AI products with this standard, consumers will be able to make informed choices based on the trustworthiness of the technology they are using. This initiative is particularly important as AI continues to be integrated into various aspects of daily life, making transparency and accountability essential.

ETRI’s proposals are part of a broader effort to establish international norms and guidelines for AI development. As AI technologies become more prevalent, the need for standardized practices that prioritize safety and consumer trust has never been more urgent. ETRI’s involvement with ISO/IEC signals a commitment to fostering global collaboration in shaping the future of AI.

Development of the two standards is expected to begin in earnest, with ETRI taking the lead. The institute, based in South Korea, is known for its pioneering work in electronics and telecommunications. By advocating for these standards, ETRI aims to position itself at the forefront of global discussions on AI safety and ethics.

As the world increasingly relies on AI technologies, initiatives like AI Red Team Testing and the Trustworthiness Fact Label are vital. They not only address potential risks but also give consumers the knowledge needed to navigate an evolving digital landscape. In this context, ETRI’s proactive measures serve as a model for other organizations aiming to enhance the safety and trustworthiness of AI systems worldwide.

The introduction of these standards reflects a growing recognition of the importance of responsible AI development. With significant implications for developers, consumers, and policymakers alike, ETRI’s initiatives are set to play a crucial role in shaping the future of artificial intelligence.
