Local LLM Integration Boosts Research Efficiency with NotebookLM

Pairing a local large language model (LLM) with a research tool such as Google’s NotebookLM can noticeably speed up work on large projects. This approach lets users combine NotebookLM’s structured, source-grounded insights with the speed and privacy of a model running on their own machine. The result is a workflow that offers both efficiency and control in digital research.

Revolutionizing Research Workflows

For professionals doing complex research, the main challenge is often managing large amounts of information while keeping it accurate. NotebookLM is well suited to this: it organizes research material and grounds its answers in the sources the user provides. Wanting a more personalized setup, one user experimented with pairing it with a local LLM and found that productivity improved markedly.

The hybrid model combines the strengths of both tools. NotebookLM excels at contextually accurate, source-grounded answers, while a local LLM, such as OpenAI’s open-weight gpt-oss-20b, provides the flexibility and speed needed when first getting to grips with a topic. Together, they let users quickly draft a structured overview of a complex subject, such as self-hosting applications with Docker.

How the Integration Functions

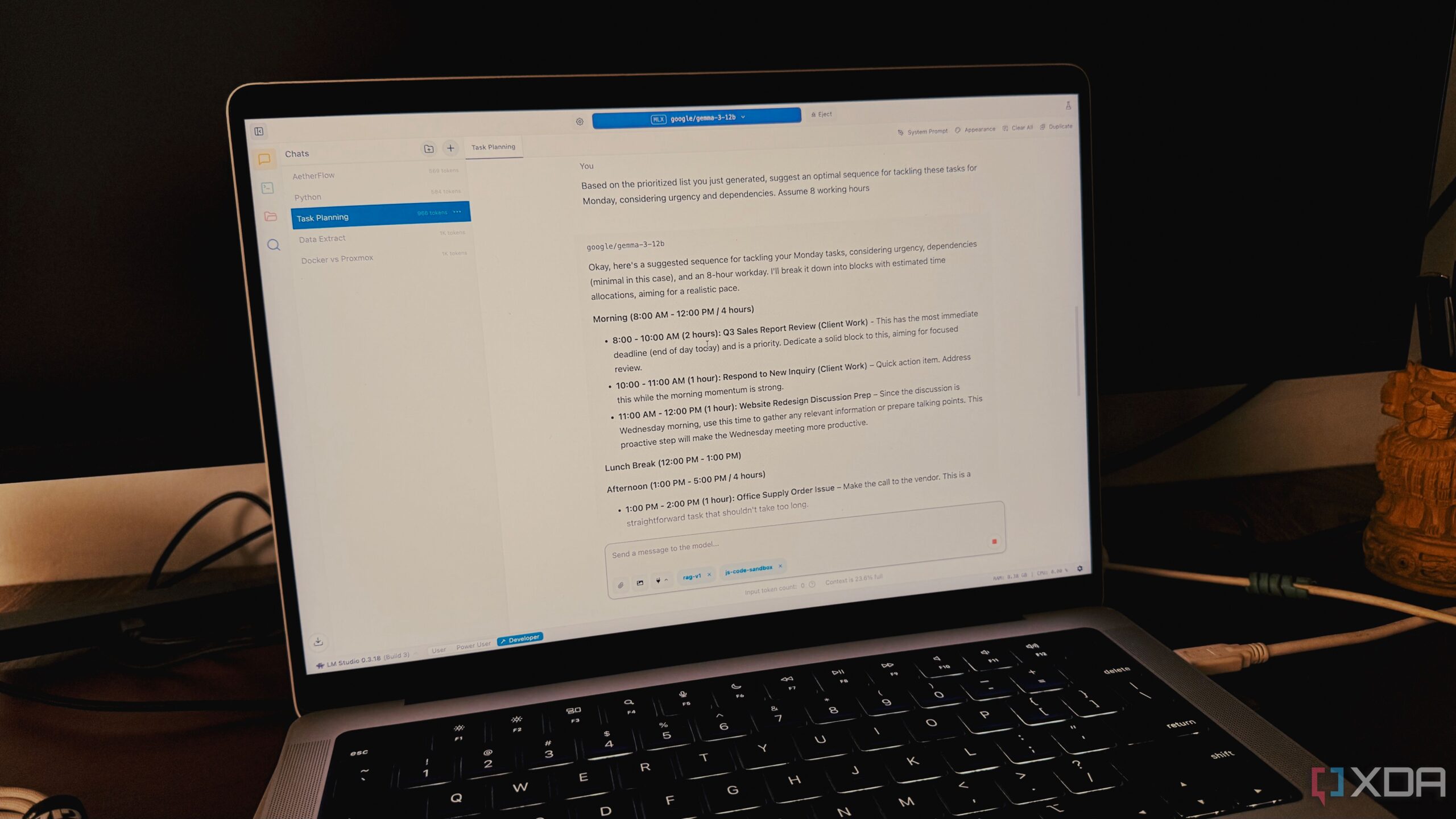

The process begins in LM Studio, a desktop application for running LLMs locally, where the local model generates a comprehensive primer on a chosen subject. When exploring Docker, for instance, it can produce an overview of essential security practices and networking fundamentals. This output serves as the foundation for deeper research.

Once the local LLM has produced the overview, the next step is to copy it into NotebookLM and add it as a source. There it sits alongside previously collected material, such as PDFs, YouTube transcripts, and blog posts, so NotebookLM can draw on the primer and those sources together.
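The generate-then-transfer step can be scripted. The following is a minimal, hypothetical sketch rather than the exact workflow described here. It assumes LM Studio’s local server is running at its default address (`http://localhost:1234`) and exposing its OpenAI-compatible chat completions endpoint, and that the loaded model is identified as `openai/gpt-oss-20b`. The prompt wording, the `temperature` value, and the helper names `build_primer_request`, `request_primer`, and `save_primer` are illustrative assumptions. The script writes the primer to a Markdown file, which is then uploaded to NotebookLM manually as a source.

```python
import json
import urllib.request
from pathlib import Path

# Assumption: LM Studio's local server runs on its default port (1234)
# and serves an OpenAI-compatible chat completions endpoint.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"
# Assumption: the identifier LM Studio shows for the loaded model; adjust to match yours.
MODEL_ID = "openai/gpt-oss-20b"


def build_primer_request(topic: str, model: str = MODEL_ID) -> dict:
    """Build an OpenAI-style chat request asking the local model for a structured primer."""
    # Hypothetical prompt; tailor the headings to the subject being researched.
    prompt = (
        f"Write a structured primer on {topic}. "
        "Use headings for core concepts, security practices, and networking fundamentals."
    )
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a concise technical writer."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.3,  # illustrative value favoring focused output
    }


def request_primer(topic: str, url: str = LMSTUDIO_URL) -> str:
    """POST the request to the local server and return the generated text."""
    body = json.dumps(build_primer_request(topic)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    # Local generation can be slow, so allow a generous timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


def save_primer(topic: str, text: str, out_dir: Path = Path(".")) -> Path:
    """Write the primer to a Markdown file ready to upload to NotebookLM as a source."""
    slug = "-".join(topic.lower().split())
    path = out_dir / f"primer-{slug}.md"
    path.write_text(f"# Primer: {topic}\n\n{text}\n", encoding="utf-8")
    return path
```

For example, calling `save_primer(topic, request_primer(topic))` with `topic = "self-hosting applications with Docker"` writes `primer-self-hosting-applications-with-docker.md` to the current directory. The sketch sticks to the standard library so it has no dependencies; an OpenAI-compatible client library pointed at the same base URL could be used instead.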

This method streamlines the research process and improves the accuracy of information retrieval. Users can ask NotebookLM specific questions, such as which components are essential for self-hosting with Docker, and receive prompt, relevant answers drawn from their sources.

Additional features add to the productivity gains. NotebookLM’s audio overview generation turns the research into a personalized audio summary, so users can review their findings while multitasking. Its citations link answers back to the underlying sources, which makes facts easy to trace and reduces the time spent on fact-checking and validation.

This hybrid approach lets users draw on the strengths of both local and cloud-based tools: the local model contributes speed and privacy, and NotebookLM contributes contextual accuracy. Together they provide a solid framework for tackling complex projects.

As professionals look for new ways to improve their research workflows, pairing local LLMs with platforms like NotebookLM is a promising option. The efficiency gained saves time and leaves more room for deeper engagement with the material.

For those aiming to maximize productivity, a hybrid research strategy is well worth adopting. Combining local LLMs with NotebookLM offers a practical way to build a more efficient research environment, and users are encouraged to explore how such a setup could fit their own projects.
