Users Rush to Switch from LM Studio to Llama.cpp for AI Efficiency

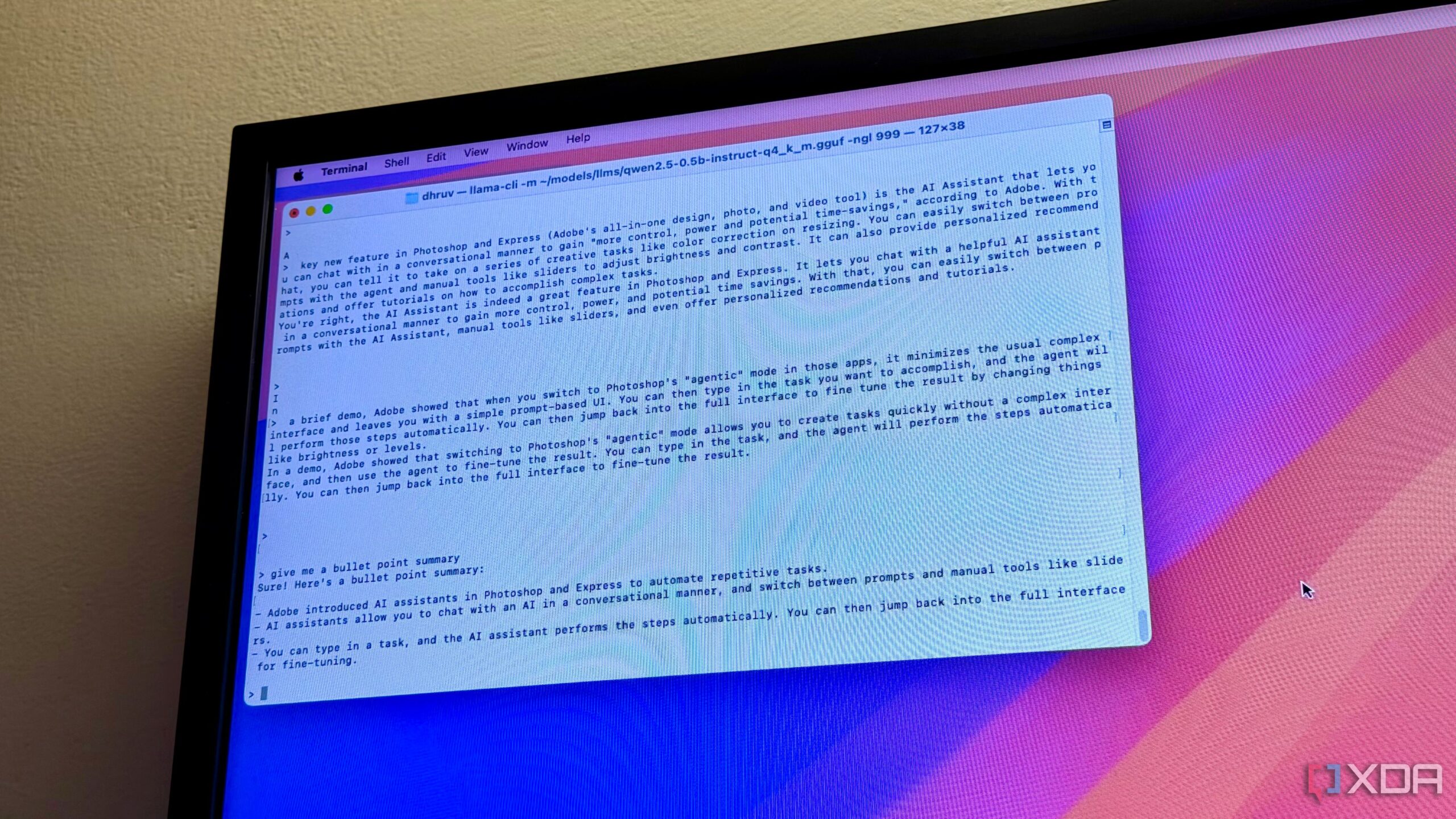

UPDATE: A growing number of users are switching from LM Studio and Ollama to Llama.cpp for more control and efficiency in local AI setups. The shift, which gained momentum this week, reflects a broader move towards terminal-based tools that offer deeper customization and better performance.

Why it matters NOW: As local AI matures, demand for efficient, user-controlled environments is rising. Llama.cpp gives users direct access to their models, without the graphical user interfaces (GUIs) and convenience layers that can add overhead and hide settings.

Recent reports indicate that many users initially turned to LM Studio and Ollama for their intuitive designs and ease of use. These tools excel at helping newcomers test models quickly. However, as users sought higher performance on modest hardware, the tools’ limitations became apparent. As one user stated, “I wanted more control over memory constraints and token speeds on my anemic hardware.”

With Llama.cpp, the experience changes noticeably. Users report faster startup times and lower resource utilization, and they can tune the runtime to what their own system can handle. The terminal-first approach strips away the layers that sit between the user and the model.

Key Benefits:

– Lean and Fast: Built in C++, Llama.cpp runs efficiently even on lower-powered systems, making it ideal for embedded systems and home servers.

– Portability: Llama.cpp runs across a range of platforms, including macOS, Linux, and even Raspberry Pi, without extensive reconfiguration.

– Customization: Users can quantize models on-device, optimizing memory usage and performance for their specific hardware setups.
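To illustrate why quantization matters on constrained hardware, the sketch below estimates how much memory a model's weights need at different bit widths. The function names and the 7-billion-parameter example are hypothetical, and the figure covers weights only: real quantized files store extra scaling data, and the runtime also needs memory for context, so actual usage will be somewhat higher.

```python
def estimate_weight_bytes(n_params: int, bits_per_weight: float) -> float:
    """Approximate bytes needed to store n_params weights at a given bit width.

    This is a back-of-the-envelope figure (params * bits / 8), not the exact
    size of any particular quantized file format.
    """
    if n_params < 0 or bits_per_weight <= 0:
        raise ValueError("n_params must be >= 0 and bits_per_weight must be > 0")
    return n_params * bits_per_weight / 8


def to_gib(n_bytes: float) -> float:
    """Convert a byte count to gibibytes."""
    return n_bytes / 2**30


# Hypothetical example: a 7-billion-parameter model at three bit widths.
N_PARAMS = 7_000_000_000
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    size = to_gib(estimate_weight_bytes(N_PARAMS, bits))
    print(f"{label}: about {size:.1f} GiB of weights")
```

Halving the bit width halves the weight footprint, which is often the difference between a model fitting in memory on a small machine and not fitting at all.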

The open-source nature of Llama.cpp also stands out. LM Studio relies on Llama.cpp under the hood but does not expose the same flexibility. Switching to Llama.cpp gives users the same foundation that popular GUIs are built on, without the extra layers on top. Developers can integrate it into scripts or automate tasks via API calls, which gives them fine-grained control over their AI applications.
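As one way to picture the scripting workflow, here is a minimal sketch that sends a prompt to a locally running Llama.cpp server over HTTP. It assumes a server has already been started and is serving an OpenAI-style chat completions endpoint at the address shown; the URL, port, helper names, and default parameters are assumptions for illustration and should be adjusted to the actual setup.

```python
import json
import urllib.request

# Assumption: a Llama.cpp server is listening at this address.
SERVER_URL = "http://127.0.0.1:8080/v1/chat/completions"


def build_chat_payload(prompt: str, max_tokens: int = 128,
                       temperature: float = 0.7) -> dict:
    """Build the JSON body for a single-turn chat request (hypothetical helper)."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }


def ask(prompt: str, url: str = SERVER_URL, timeout: float = 60.0) -> str:
    """POST the prompt to the server and return the text of the first reply."""
    data = json.dumps(build_chat_payload(prompt)).encode("utf-8")
    request = urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    # Assumes the OpenAI-style response shape: choices -> message -> content.
    return body["choices"][0]["message"]["content"]
```

With a server running, `ask("Explain quantization in one sentence.")` returns the model's reply as a string, so the same function can be dropped into a cron job, a shell pipeline, or a larger script.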

As the AI landscape evolves, the shift towards terminal-based solutions like Llama.cpp reflects a broader trend of users wanting not just functionality but a deeper understanding of their tools. The appeal is not only speed; it is control over how the model actually runs.

What to watch for: The growing community around Llama.cpp will likely lead to more resources and support for users transitioning from GUI tools. As this movement gains traction, expect a surge in tutorials, discussions, and shared experiences, enhancing the overall understanding of local AI operations.

In conclusion, the transition from LM Studio and Ollama to Llama.cpp is more than a technical upgrade. It reflects a shift in local AI towards tools that prioritize speed, control, and efficiency. As more users make the change, its effect on how local AI setups are built is likely to grow.
